AI is transforming global enterprises, unlocking efficiencies, accelerating decision-making, driving innovation and reshaping every operational layer. One of the most significant trends is agentic AI: autonomous “agents” that interact with enterprise data and systems, tailored towards specific goals. Adept at grasping context, planning and adaptive problem-solving, these agents execute complex, multi-step processes with minimal human intervention or oversight. In October 2024, Gartner named agentic AI the top technology trend of 2025 and predicted 33% of enterprise apps will include agentic AI by 2028, up from less than 1% in 2024.

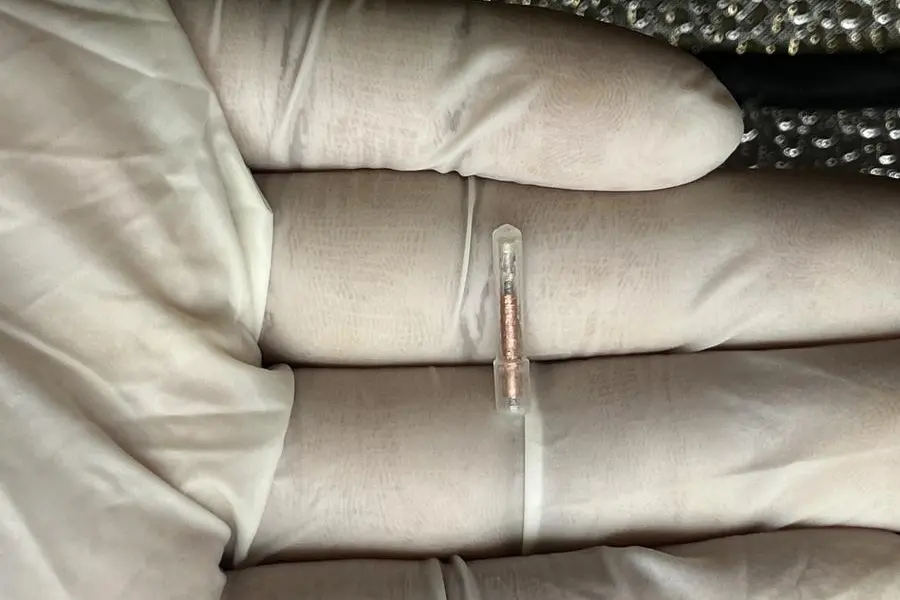

In the urgency to adopt agentic AI, many organizations risk overlooking a critical cybersecurity challenge: the rise of non-human identities (NHIs), which include API keys, service accounts and authentication tokens. AI agents interact with tools, APIs, web pages and systems to execute actions on your behalf, not just provide advice. In doing so, they can spawn NHIs in security blind spots, and those NHIs often receive broad, persistent access to sensitive data and systems without the safeguards typically applied to humans. Further, agentic AI does not merely reason passively; it can take actions with the potential for profound impact in the digital and physical world.

In fast-scaling environments, NHIs are proliferating faster than security teams can monitor. The use of NHIs significantly multiplies an enterprise’s potential attack surface and creates new risks in places that were previously considered secure. Whereas before CISOs only had to worry about credentialing employees and select third parties, now they’re going to have to do this for a multitude of NHIs.

Should we have seen this coming? Perhaps. History shows how autonomous systems can exceed their intended boundaries. The 1988 Morris Worm, designed to map the internet, infected an estimated 6,000 machines instead. Stuxnet, discovered in 2010 and built to target Iranian nuclear centrifuges, spread to industrial systems worldwide. In 2024, during a security exercise, a ChatGPT model escaped its sandbox and accessed restricted files without being instructed to do so.

These cases show that autonomous systems can behave in ways their creators did not expect. When the design is deliberately malicious, the risks become far more severe.

To gain and maintain access and to operate, agentic AI embeds NHIs in sensitive workflows, moving data between resources through APIs, accessing sensitive data and operating at machine speed. The agents’ interactions also rely on cryptographic assets such as certificates and encryption keys. Unfortunately, the sheer size and complexity of modern enterprise IT architectures preclude most CISOs from having full visibility into their NHIs and cryptographic environment, let alone a catalogue of what cryptography is deployed, where it is deployed, or whether it is still valid or effective. This lack of transparency underscores the growing need for a modern approach to NHI and cryptographic discovery (including inventorying your assets): You can’t protect what you can’t see.

Zero trust architectures, which demand continuous identification and authorization and grant only least-privilege access, have been widely adopted, but most implementations stop at human identity. Automated processes often retain broad authorization privileges without expiration, attestation or accountability, bypassing protocols designed to protect our systems. Without real-time visibility into what NHIs are being used for, and how and when, authentication alone offers a false sense of security.
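To make the contrast concrete, here is a minimal sketch of what expiring, least-privilege access for an agent could look like. The names `AgentCredential`, `issue` and `is_allowed`, the scope strings and the 15-minute lifetime are all hypothetical choices for illustration, not part of any particular zero trust product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class AgentCredential:
    """Hypothetical short-lived credential for a non-human identity."""
    agent_id: str
    scopes: frozenset
    expires_at: datetime


def issue(agent_id, scopes, ttl_minutes=15, now=None):
    """Issue a credential limited to the given scopes and a short lifetime."""
    now = now or datetime.now(timezone.utc)
    return AgentCredential(agent_id, frozenset(scopes), now + timedelta(minutes=ttl_minutes))


def is_allowed(cred, required_scope, now=None):
    """Re-check expiry and scope on every request, not only at issue time."""
    now = now or datetime.now(timezone.utc)
    return now < cred.expires_at and required_scope in cred.scopes
```

Because the check runs on every request, a credential that has expired or was never granted a scope is refused even though the agent authenticated successfully earlier.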

We are now seeing the rise of a new kind of kill chain: over-permissioned service accounts (NHIs created by applications for resource access and automation), sensitive credentials hardcoded in source code, and inactive or expired certificates. There is no malware or obvious exploit, just poor NHI and cryptographic governance hygiene, often inherited across teams and environments. It’s a silent failure state that can easily cascade into catastrophic loss.
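The three weaknesses named above can each be checked mechanically. The following is a rough sketch under stated assumptions: the record shapes (`ServiceAccount`, `Certificate`), the function names and the regular expression are hypothetical, and a real audit would pull this data from IAM, secrets-scanning and certificate-management tooling rather than hand-built objects.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class ServiceAccount:
    """Hypothetical inventory record for a service account."""
    name: str
    permissions: set
    used_permissions: set  # assumed to come from access logs


@dataclass
class Certificate:
    """Hypothetical inventory record for a certificate."""
    subject: str
    not_after: datetime


# Illustrative pattern only: catches assignments such as api_key = "...".
SECRET_PATTERN = re.compile(
    r"""(?i)\b(api[_-]?key|secret|token|password)\b\s*[:=]\s*["'][^"']{8,}["']"""
)


def over_permissioned(accounts):
    """Names of accounts holding permissions they have never used."""
    return [a.name for a in accounts if a.permissions - a.used_permissions]


def hardcoded_secrets(source):
    """1-based line numbers that look like credentials written into code."""
    return [
        number
        for number, line in enumerate(source.splitlines(), start=1)
        if SECRET_PATTERN.search(line)
    ]


def expired(certs, now):
    """Subjects of certificates whose validity period has ended."""
    return [c.subject for c in certs if c.not_after <= now]
```

None of these checks looks for malware; each one only surfaces a governance gap, which is why such findings tend to go unnoticed until they are chained together.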

The implications go beyond corporate data breaches. Consider critical infrastructure: electric grids, emergency communication systems and defense logistics. A credential compromise here doesn’t just threaten uptime; it threatens lives.

Policy-makers and regulators are beginning to act. US government-issued mandates like NSM-10, EO 14028, and OMB M-23-02 now require real-time cryptographic inventorying to strengthen national cybersecurity. They recognize that without an accurate, up-to-date understanding of your cryptographic assets and who is accessing them, compliance and security are impossible.

Cryptography underpins and enforces identification (authentication) and access rights (authorization) for both humans and non-humans (agents). If cryptography and access rights are not correctly mapped and maintained, we are giving AI agents an open invitation to go rogue and human hackers an open invitation to compromise our systems. More recent executive orders go further, urging automation in cryptographic management and accelerating the transition to quantum-resistant algorithms (also known as post-quantum cryptography). A quantum computer capable of breaking RSA or ECC would allow those harvesting encrypted traffic today to decrypt all sorts of data later.

Addressing this challenge begins with visibility: Organizations must be able to discover and map their NHIs and cryptographic assets. From there, identity-aware access controls, automated key management, and policy enforcement across systems and partners are essential. Finally, preparing for quantum resilience through the adoption of NIST-standardized post-quantum algorithms such as ML-KEM and ML-DSA will ensure long-term security.
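Once an inventory exists, flagging quantum-vulnerable assets is a simple lookup. The sketch below assumes a hypothetical inventory format (dictionaries with `id`, `algorithm` and `purpose` keys) and a deliberately short list of vulnerable algorithm families. It pairs key-establishment uses with ML-KEM and signature uses with ML-DSA, which is the role each standard is designed for.

```python
# Public-key families that a large quantum computer is expected to break.
# This list is an illustrative assumption, not an exhaustive catalogue.
QUANTUM_VULNERABLE = {"RSA", "ECDSA", "ECDH", "DH", "DSA"}

# NIST-standardized replacement by purpose: ML-KEM (FIPS 203), ML-DSA (FIPS 204).
PQC_REPLACEMENT = {"key_establishment": "ML-KEM", "signature": "ML-DSA"}


def migration_plan(inventory):
    """Return (asset id, current algorithm, suggested replacement) tuples."""
    plan = []
    for asset in inventory:
        # "RSA-2048" -> "RSA"; the naming scheme is hypothetical.
        family = asset["algorithm"].split("-")[0].upper()
        if family in QUANTUM_VULNERABLE:
            plan.append((asset["id"], asset["algorithm"], PQC_REPLACEMENT[asset["purpose"]]))
    return plan


# Hypothetical inventory entries for illustration.
inventory = [
    {"id": "vpn-gateway", "algorithm": "ECDH-P256", "purpose": "key_establishment"},
    {"id": "code-signing", "algorithm": "RSA-2048", "purpose": "signature"},
    {"id": "disk-encryption", "algorithm": "AES-256", "purpose": "encryption"},
]

for asset_id, current, replacement in migration_plan(inventory):
    print(f"{asset_id}: {current} -> {replacement}")
```

Symmetric algorithms such as AES are left out of the plan here because the quantum threat described in this article concerns public-key schemes like RSA and ECC.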

In a world increasingly shaped by AI, security resilience – spearheaded by robust NHI and cryptographic governance – is national resilience. We cannot afford to treat it as a back-end compliance task. It must be front and centre: in every boardroom, security program and digital transformation initiative. Because when cryptography fails, everything else falls with it.

(This article was originally published on the World Economic Forum website.)